Features and benefits

The main features of Cogine are listed below; for details, please check the technical documentation.

| Name | Description | LangChain | Chidori | Cogine |
|---|---|---|---|---|
| Decentralized data | User data is stored outside the application, and the user controls which applications may access it. | | | |
| Interoperability | An agent can interoperate with any other agent, even one written by a different developer. | Limited to agents from the same developer; APIs must be called manually. | Limited to agents from the same developer; APIs must be called manually. | |
| Multi-agent collaboration | Provides a standard collaboration mechanism between user, system, and agents. | No standard mechanism; communication is handled case by case and is limited to agents from the same developer. | No standard mechanism; communication is handled case by case and is limited to agents from the same developer. | |
| Function/agent-level sandboxing | Every agent, and even a single function, can be isolated, so that agents from different developers can run safely in the same memory environment. | | | |
| Conversational computing | Code can ask the user, the system, or other agents for more information at any point in a function; the agent pauses and resumes when it receives a response. | | | |
| Logic abstraction | We reorganized the whole program so that developers can focus on business logic and forget about hardware, data, and programming-language abstractions. | | | |
| Visual graph editing | We built a graph editor that is as simple as Houdini and as powerful as any Turing-complete programming language. | | | |
| Agent format standard | We defined an agent format standard that represents a Turing-complete agent program, which can be loaded and run dynamically, so anyone can develop an agent to handle personal needs. | Agents are runtime in-memory objects. | Agents are runtime in-memory objects. | |
| Virtual machine and dynamic loading and running | Agents can be loaded and run dynamically. | | | |
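
The conversational computing row describes code that pauses to ask a question and resumes once a reply arrives. As a rough illustration only, and not Cogine's actual mechanism, the same pause-and-resume behavior can be sketched in Python with a generator. The names `book_trip` and `run_agent` are hypothetical and exist only for this sketch.

```python
from typing import Generator, List, Tuple


def book_trip() -> Generator[str, str, str]:
    """Hypothetical agent logic: each `yield` pauses until a reply is sent in."""
    city = yield "Which city are you travelling to?"
    date = yield f"What date do you want to arrive in {city}?"
    return f"Booking a trip to {city} on {date}."


def run_agent(agent: Generator[str, str, str],
              replies: List[str]) -> Tuple[List[str], str]:
    """Drive the agent with one reply per question.

    Returns the questions that were asked and the agent's final result.
    """
    questions = [next(agent)]  # run until the first question
    for reply in replies:
        try:
            # Resume the paused agent with the reply; it runs to the next yield.
            questions.append(agent.send(reply))
        except StopIteration as done:
            # The agent finished; its return value rides on the exception.
            return questions, done.value
    raise RuntimeError("agent is still waiting for a reply")


if __name__ == "__main__":
    asked, outcome = run_agent(book_trip(), ["Paris", "2024-05-01"])
    for question in asked:
        print("agent asks:", question)
    print(outcome)
```

The generator keeps its local state (`city`, `date`) while it is paused, which is the property the table row relies on: the question can be asked from any point inside the function.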

Development comparison with LangChain and Chidori

In other frameworks, you have to learn Python, manage the structure of the program, and learn a good deal of architecture design (such as framework-specific classes and their inheritance relationships). In Cogine, the only thing you need to care about is your logic; there is no other programming burden.

- Cogine

- LangChain

- Chidori

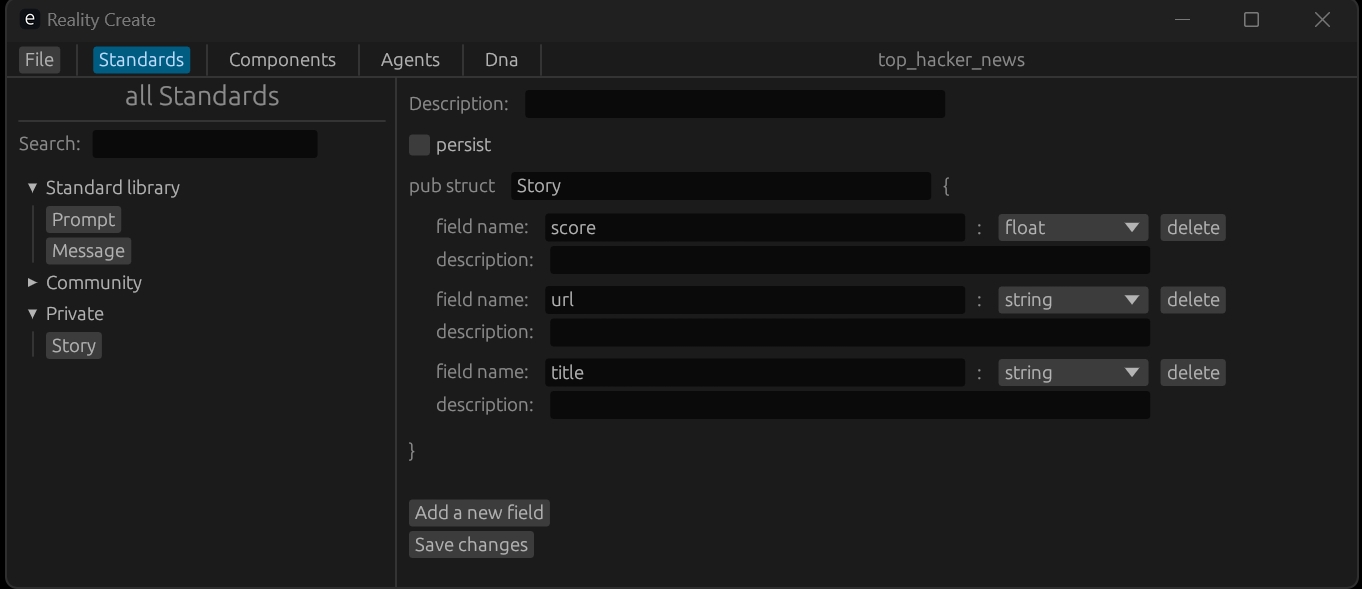

First, define a `Story` struct in the editor:

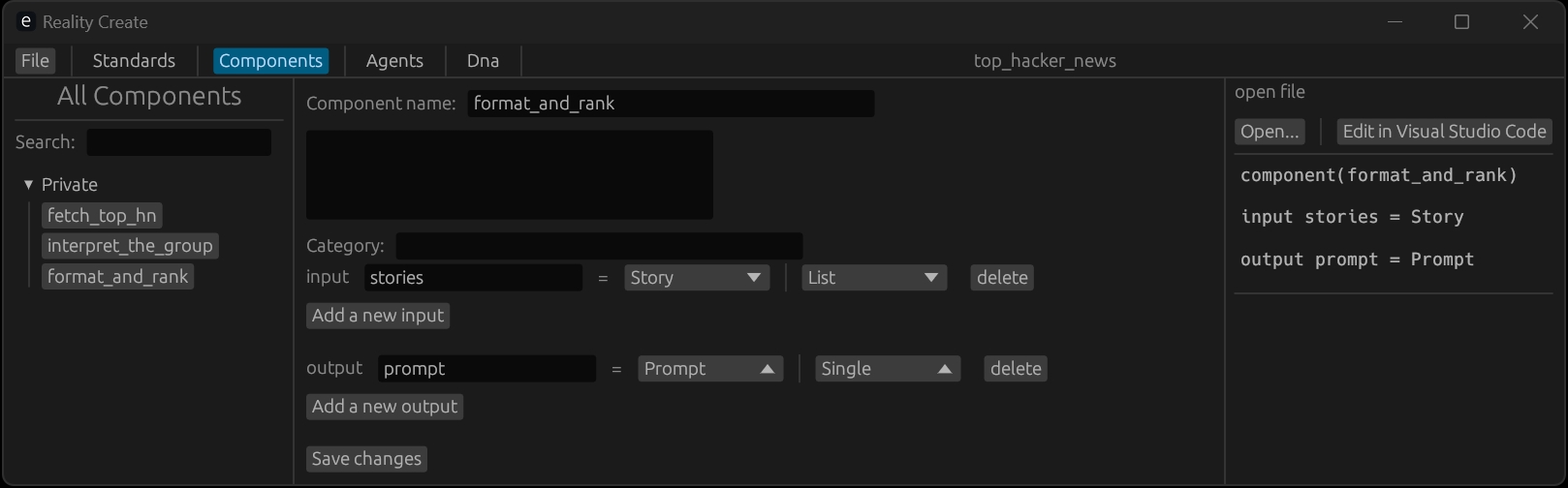

Then define your component's inputs and outputs in the editor, using the struct defined above:

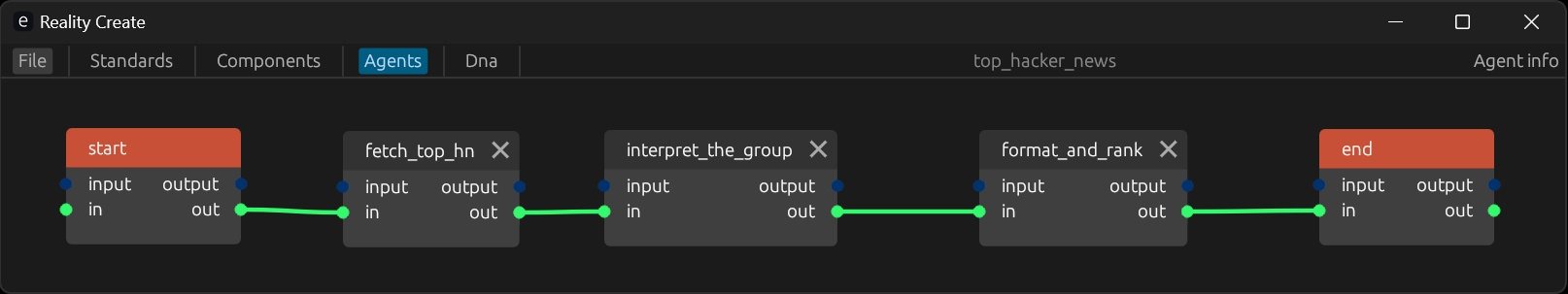

Then define your logic flow:

Finally, write your component's code in Lua:

fetch_top_hn.lua:

    -- Fetch the IDs of the top stories, then load each story into `stories`.
    function updating()
        local story_ids = get_url("https://hacker-news.firebaseio.com/v0/topstories.json?print=pretty")
        for i = 1, #story_ids do
            local url = string.format("https://hacker-news.firebaseio.com/v0/item/%s.json?print=pretty", story_ids[i])
            stories[i] = get_url(url)
        end
    end

interpret_the_group.lua:

    -- Ask the model to keep only launches of new AI projects.
    function updating()
        local prompt = string.format("Based on the following list of HackerNews threads, filter this list to only launches of new AI projects: %s", table_to_string(stories))
        -- The backslash is escaped so that it appears literally in the instruction.
        local result = chat_completions("Please only return the result list as a RFC8259 compliant JSON format with no '\\' character, no extra information", prompt)
        if result["ok"] == true then
            -- Copy the filtered stories to the component's output.
            for i = 1, #result["content"] do
                out_stories[i] = result["content"][i]
            end
        end
    end

format_and_rank.lua:

    -- Ask the model to rank the projects, then send the titles to the user.
    function updating()
        local prompt = string.format("Format this list of new AI projects in markdown, ranking the most interesting projects from most interesting to least. %s", table_to_string(stories))
        -- The backslash is escaped so that it appears literally in the instruction.
        local result = chat_completions("Please only return the result list as a RFC8259 compliant JSON format with no '\\' character, no extra information", prompt)
        local msg = "The news with AI topics are:\n"
        if result["ok"] == true then
            -- Append one numbered line per story title.
            for i = 1, #result["content"] do
                msg = string.format("%s%d. %s\n", msg, i, result["content"][i]["title"])
            end
        end
        message["content"] = msg
        message["receiver"] = "user"
    end
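
Both prompt components above ask the model for RFC 8259 JSON and then check `result["ok"]` before reading `result["content"]`. How Cogine's `chat_completions` builds that result is not shown here, so the following Python sketch is only one assumed way such a result could be produced from a raw model reply. The helper name `parse_reply` and the exact shape of the returned dictionary are assumptions made for illustration.

```python
import json
from typing import Any, Dict


def parse_reply(raw: str) -> Dict[str, Any]:
    """Hypothetical helper: wrap a raw model reply in an {ok, content} result.

    `ok` is True only when the reply parses as JSON and is a list,
    which is what the components above iterate over.
    """
    try:
        content = json.loads(raw)
    except json.JSONDecodeError:
        # The model added prose or produced malformed JSON.
        return {"ok": False, "content": None}
    if not isinstance(content, list):
        # Valid JSON, but not the list the prompt asked for.
        return {"ok": False, "content": None}
    return {"ok": True, "content": content}


if __name__ == "__main__":
    print(parse_reply('[{"title": "Show HN: a new AI project"}]'))
    print(parse_reply("Sure! Here is the list you asked for."))
```

Checking `ok` before indexing into `content`, as the Lua components do, keeps a malformed reply from raising an error in the middle of the flow.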

The equivalent Chidori implementation, in Python:

    import aiohttp
    import asyncio
    from typing import List, Optional
    import json
    from chidori import Chidori, GraphBuilder


    class Story:
        def __init__(self, title: str, url: Optional[str], score: Optional[float]):
            self.title = title
            self.url = url
            self.score = score


    HN_URL_TOP_STORIES = "https://hacker-news.firebaseio.com/v0/topstories.json?print=pretty"


    async def fetch_story(session, story_id):
        async with session.get(f"https://hacker-news.firebaseio.com/v0/item/{story_id}.json?print=pretty") as response:
            return await response.json()


    async def fetch_hn() -> List[Story]:
        async with aiohttp.ClientSession() as session:
            async with session.get(HN_URL_TOP_STORIES) as response:
                story_ids = await response.json()

            tasks = []
            for story_id in story_ids[:30]:  # Limit to 30 stories
                tasks.append(fetch_story(session, story_id))

            stories = await asyncio.gather(*tasks)

            stories_out = []
            for story in stories:
                story_dict = {k: story.get(k, None) for k in ('title', 'url', 'score')}
                stories_out.append(Story(**story_dict))

            return stories_out


    # ^^^^^^^^^^^^^^^^^^^^^^^^^^^
    # Methods for fetching Hacker News posts via the API


    class ChidoriWorker:
        def __init__(self):
            self.c = Chidori("0", "http://localhost:9800")

        async def build_graph(self):
            g = GraphBuilder()

            # Create a custom node, we will implement our
            # own handler for this node type
            h = await g.custom_node(
                name="FetchTopHN",
                node_type_name="FetchTopHN",
                output="{ output: String }"
            )

            # A prompt node, pulling in the value of the output from FetchTopHN
            # and templating that into the prompt for GPT3.5
            h_interpret = await g.prompt_node(
                name="InterpretTheGroup",
                template="""
                    Based on the following list of HackerNews threads,
                    filter this list to only launches of new AI projects: {{FetchTopHN.output}}
                """
            )
            await h_interpret.run_when(g, h)

            h_format_and_rank = await g.prompt_node(
                name="FormatAndRank",
                template="""
                    Format this list of new AI projects in markdown, ranking the most
                    interesting projects from most interesting to least.

                    {{InterpretTheGroup.promptResult}}
                """
            )
            await h_format_and_rank.run_when(g, h_interpret)

            # Commit the graph, this pushes the configured graph
            # to our durable execution runtime.
            await g.commit(self.c, 0)

        async def run(self):
            # Construct the agent graph
            await self.build_graph()

            # Start graph execution from the root
            await self.c.play(0, 0)

            # Run the node execution loop
            await self.c.run_custom_node_loop()


    async def handle_fetch_hn(node_will_exec):
        stories = await fetch_hn()
        result = {"output": json.dumps([story.__dict__ for story in stories])}
        return result


    async def main():
        w = ChidoriWorker()
        await w.c.start_server(":memory:")
        await w.c.register_custom_node_handle("FetchTopHN", handle_fetch_hn)
        await w.run()


    if __name__ == "__main__":
        asyncio.run(main())
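
The `run_when` calls in the Chidori example declare that `InterpretTheGroup` runs after `FetchTopHN`, and `FormatAndRank` after `InterpretTheGroup`. As a minimal sketch of how such dependencies resolve to an execution order, the snippet below uses Python's standard `graphlib` module (Python 3.9+). It is independent of Chidori and makes no claim about how Chidori's runtime schedules nodes; the function name `execution_order` is hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set


def execution_order(depends_on: Dict[str, Set[str]]) -> List[str]:
    """Return the nodes in an order where each runs after its dependencies.

    `depends_on` maps a node name to the set of nodes it waits for.
    """
    return list(TopologicalSorter(depends_on).static_order())


if __name__ == "__main__":
    # Dependencies as declared by the run_when calls in the example.
    graph = {
        "FetchTopHN": set(),
        "InterpretTheGroup": {"FetchTopHN"},
        "FormatAndRank": {"InterpretTheGroup"},
    }
    print(execution_order(graph))
```

For a linear chain like this one there is only one valid order; with branching graphs, several orders can satisfy the same dependencies.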